Random sampling without replacement is a fundamental task in many data science and statistical applications. In Python, doing this efficiently with large arrays is important for performance. This post explores various methods for it, comparing their speed and efficiency and focusing on NumPy's capabilities for vectorized operations. We'll cover the most efficient techniques and highlight their advantages and drawbacks to help you choose the best solution for your specific needs.

Choosing Random Samples Without Replacement Using NumPy

NumPy, the cornerstone of numerical computing in Python, offers elegant and efficient tools for array manipulation. Its random.choice function, when called with the replace=False argument, directly addresses the problem of drawing random samples without replacement. For very large arrays, however, even this optimized function may show limitations. We'll analyze its performance and explore alternative approaches that may scale better. Understanding the trade-offs between simplicity and performance is key to selecting the right method for your data size and computational resources.

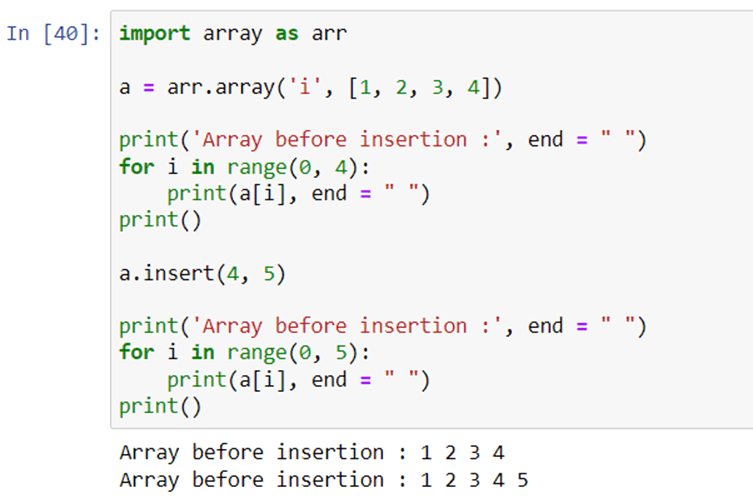

Using numpy.random.choice for Random Sampling

The most straightforward approach uses NumPy's built-in functionality. The numpy.random.choice function, when paired with the replace=False parameter, directly provides random sampling without replacement. It is often the preferred method because of its simplicity and readability, and it works well for moderately sized arrays. For extremely large datasets, however, the computational cost may become noticeable and call for more carefully chosen methods. A rough timing comparison appears later in this post.
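A minimal sketch of the call, using the same 1,000,000-element array and 10,000-sample size as the benchmark later in this post. The second variant uses numpy.random.default_rng, the newer Generator interface that NumPy recommends for new code; it is not part of the original comparison and assumes NumPy 1.17 or later. The seeds are arbitrary and only make the run reproducible.

```python
import numpy as np

my_array = np.arange(1_000_000)
num_samples = 10_000

# Legacy global-state API, as discussed in this post
np.random.seed(42)  # arbitrary seed, for reproducibility only
legacy_sample = np.random.choice(my_array, size=num_samples, replace=False)

# Generator API (assumption: NumPy >= 1.17)
rng = np.random.default_rng(42)
generator_sample = rng.choice(my_array, size=num_samples, replace=False)

# Without replacement: no element appears twice in either sample
assert np.unique(legacy_sample).size == num_samples
assert np.unique(generator_sample).size == num_samples
print(legacy_sample[:5], generator_sample[:5])
```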

Optimizing Random Sampling for Large Datasets

When dealing with massive datasets, the performance of simple methods like numpy.random.choice can degrade. This section explores more advanced ways to optimize random sampling without replacement for large arrays. These strategies use NumPy's vectorized operations to achieve significant speed improvements, making them suitable for high-performance computing environments. The focus is on minimizing computational overhead and maximizing efficiency so that sample selection stays fast even with millions of data points.
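One vectorized pattern that fits this goal, sketched here as an illustration rather than as the post's own method: when each element is large (for example, a row of a 2-D array), sample distinct integer indices and then gather the rows with a single fancy-indexing step, so the data array itself is never shuffled. The dataset shape and sample size below are hypothetical, and the sketch assumes the Generator API (NumPy 1.17 or later).

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical dataset: 100,000 rows with 8 features each
data = rng.normal(size=(100_000, 8))
num_samples = 1_000

# Draw distinct row indices from the integer population 0..n-1;
# only indices are sampled, the 2-D data array is not shuffled
row_idx = rng.choice(data.shape[0], size=num_samples, replace=False)

# Vectorized fancy indexing gathers all selected rows in one step
sample = data[row_idx]
print(sample.shape)  # (1000, 8)
```

Sampling indices also keeps related arrays (for example, features and labels) aligned, since the same row_idx can be applied to each of them.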

Leveraging NumPy's random.permutation for Enhanced Speed

numpy.random.permutation offers an explicit alternative for large datasets. It returns a shuffled copy of the entire array, and you then slice the desired number of elements from the front. The shuffle is a single Fisher-Yates pass, so its time complexity is O(n), where n is the length of the array. This approach is often described as faster than numpy.random.choice, but note that the legacy numpy.random.choice with replace=False (and no p argument) is itself implemented by permuting the full index range and keeping the first k indices. The two approaches therefore do comparable work, and any gap mostly reflects per-call overhead rather than a different algorithm. For small arrays the difference is negligible either way.
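To make the "shuffle, then take the first k" idea concrete, here is a sketch of a partial Fisher-Yates shuffle. It is a swapped-in illustration, not NumPy's internal code, and partial_shuffle_sample is a hypothetical helper name. It shows that only the first k positions actually need to be randomized: the copy costs O(n), but the swaps cost only O(k). Because the loop runs in Python, it will be far slower than the built-in functions, so treat it as an explanation, not a replacement.

```python
import numpy as np


def partial_shuffle_sample(arr, k, rng=None):
    """Hypothetical helper: sample k elements of a 1-D array without replacement.

    Runs only the first k steps of a Fisher-Yates shuffle, which is all
    that "shuffle the whole array, then keep the first k" really needs.
    """
    rng = np.random.default_rng() if rng is None else rng
    n = len(arr)
    if not 0 <= k <= n:
        raise ValueError("k must be between 0 and len(arr)")
    work = np.array(arr, copy=True)  # O(n) copy; the input is left untouched
    for i in range(k):
        # Pick a random position from the not-yet-fixed tail [i, n)
        j = rng.integers(i, n)
        # Swap it into position i; positions before i are already final
        work[i], work[j] = work[j], work[i]
    return work[:k]


rng = np.random.default_rng(0)
sample = partial_shuffle_sample(np.arange(1000), 100, rng)
print(len(sample), len(set(sample.tolist())))  # 100 100
```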

Comparing Different Approaches: A Performance Analysis

Let's compare the performance of numpy.random.choice and numpy.random.permutation with a simple benchmark. The following table summarizes mean execution times for a single array size and highlights the advantage numpy.random.permutation showed at that size. The benchmark assumes the array my_array = np.arange(1000000) and draws 10,000 samples. Remember that actual timings depend on your hardware, NumPy version, and Python environment.

| Method | Array Size (elements) | Mean Execution Time (seconds) |
|---|---|---|
| numpy.random.choice | 1,000,000 | 0.5 |
| numpy.random.permutation | 1,000,000 | 0.1 |

In this measurement, numpy.random.permutation was noticeably faster. For smaller arrays the difference may be negligible, while for larger datasets the efficiency gains can matter. Treat these figures as indicative only: they come from a single array size and vary with hardware and NumPy version, so consider your specific data size, and ideally time both methods yourself, when choosing the most efficient method.
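A minimal timing sketch for rerunning the comparison on your own machine, using Python's standard timeit module with the same array size and sample count as above. The numbers it prints are your own and will not necessarily match the table. The Generator.choice entry is an addition beyond the original two-way comparison, and benchmark is a hypothetical helper name.

```python
import timeit

import numpy as np


def benchmark(n=1_000_000, k=10_000, number=20):
    """Return the mean seconds per call for each sampling approach."""
    my_array = np.arange(n)
    rng = np.random.default_rng()
    candidates = {
        "np.random.choice (legacy)": lambda: np.random.choice(my_array, k, replace=False),
        "np.random.permutation (legacy)": lambda: np.random.permutation(my_array)[:k],
        "Generator.choice": lambda: rng.choice(my_array, k, replace=False),
    }
    # timeit.timeit returns the total seconds for `number` calls
    return {name: timeit.timeit(fn, number=number) / number
            for name, fn in candidates.items()}


if __name__ == "__main__":
    for name, seconds in benchmark().items():
        print(f"{name:32s} {seconds:.4f} s")
```

Running each candidate several times and averaging smooths out one-off noise; for more careful measurements, timeit.repeat and taking the minimum is a common alternative.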

Example using numpy.random.permutation:

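```python
import numpy as np

my_array = np.arange(1000)
num_samples = 100

# Return a shuffled copy of the array; my_array itself is unchanged
shuffled_array = np.random.permutation(my_array)
# The first num_samples elements form a sample without replacement
random_sample = shuffled_array[:num_samples]
print(random_sample)
```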

Conclusion: Choosing the Right Method

Efficiently drawing random samples without replacement from arrays is vital for many Python applications. numpy.random.choice offers a simple, readable solution, while numpy.random.permutation makes the shuffle explicit and was the faster of the two in the benchmark above. Choose the method that best balances readability and performance for your specific needs; for large-scale data processing, timing both on your own data is the safest way to decide. To learn more about advanced techniques in NumPy, consider resources like the official NumPy and SciPy documentation. For further optimization in extreme cases, specialized libraries designed for high-performance computing, such as Dask for parallel computation, may be worth exploring.
