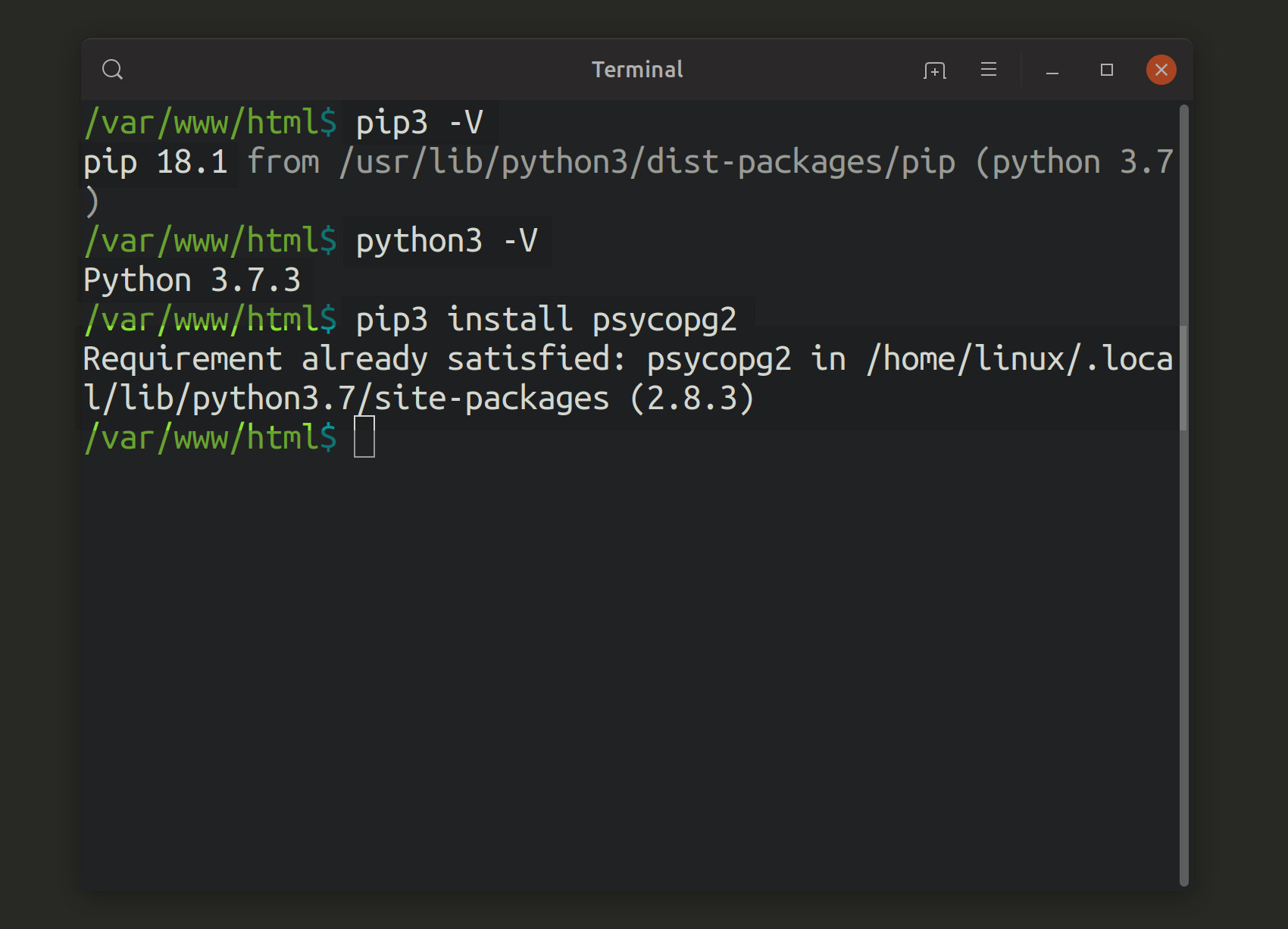

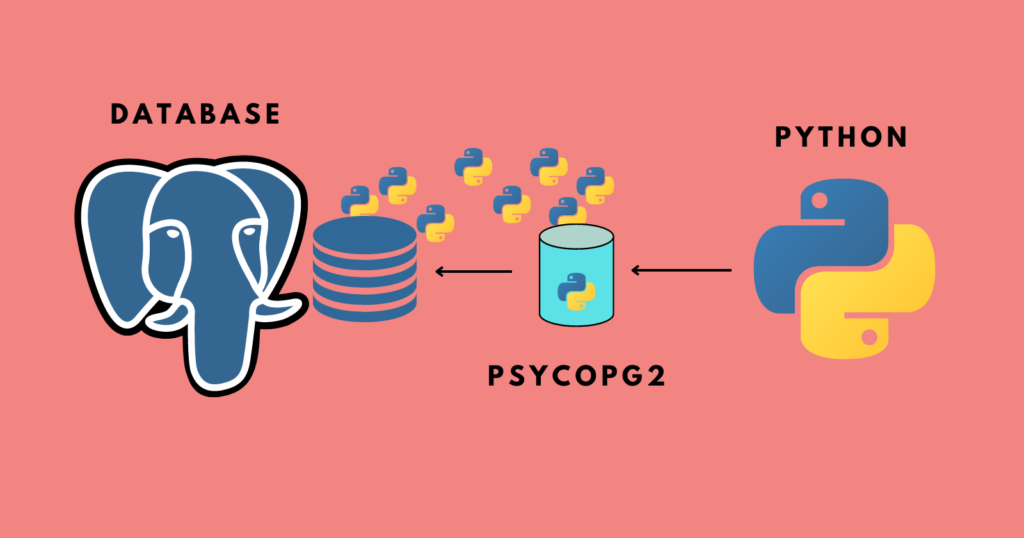

Efficiently handling large datasets is crucial for any data-intensive application. When working with PostgreSQL databases and Python, retrieving a massive table in one go can exhaust memory. This blog post explores how to use psycopg2, a popular PostgreSQL adapter for Python, to load data in manageable chunks, which keeps memory usage under control and improves performance. We'll also touch on integrating pandas for further data manipulation.

Efficiently Fetching Data from PostgreSQL in Batches with Psycopg2

Psycopg2 offers solid features for handling large datasets. Instead of retrieving the entire table at once, we can fetch data in smaller, predefined chunks. Done with a server-side cursor, this iterative approach significantly reduces memory consumption and makes it possible to process datasets that exceed available RAM. We'll explore the fetchmany() method, a core component of this strategy. The size of each chunk is configurable, which gives fine-grained control over memory usage. This approach is particularly valuable for tables containing millions or billions of rows, which are common in real-world applications. Chunk sizing matters for performance: a size that is too small leads to excessive overhead, while one that is too large risks running out of memory.

Optimizing fetchmany() for Memory Management

The fetchmany() method in psycopg2 lets you specify the number of rows to retrieve on each call, which gives you a direct way to control memory consumption. There is an important caveat. With psycopg2's default client-side cursor, execute() already transfers the entire result set to the client, so fetchmany() merely hands back rows that are already in memory. To keep memory bounded, create a named (server-side) cursor with conn.cursor(name="..."); each fetchmany() call then retrieves a new batch from the server. By adjusting the number of rows per call, you balance network overhead against memory usage. Smaller chunks mean more round trips, while larger chunks hold more rows at once. Your system's resources and the size of your data are the key inputs when choosing a chunk size. Experimentation and profiling are recommended to find the most efficient setting for your use case.
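The fetchmany() loop can be wrapped in a small generator so that the rest of a program simply iterates over batches. The sketch below is a hypothetical helper, `iter_chunks`, written against the generic DB-API cursor interface (PEP 249) rather than anything psycopg2-specific. The demo uses Python's built-in sqlite3 module purely as a stand-in driver, so it runs without a PostgreSQL server.

```python
from typing import Any, Iterator, Sequence


def iter_chunks(cursor: Any, chunk_size: int = 1000) -> Iterator[Sequence[tuple]]:
    """Yield successive lists of rows from an already-executed DB-API cursor.

    With psycopg2, pass a named (server-side) cursor so that each
    fetchmany() call pulls a new batch from the server instead of slicing
    a result set that a client-side cursor has already loaded in full.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    while True:
        rows = cursor.fetchmany(chunk_size)
        if not rows:  # an empty list means the result set is exhausted
            break
        yield rows


if __name__ == "__main__":
    # Stand-in demo: sqlite3 also implements the DB-API fetchmany().
    import sqlite3

    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE t (id INTEGER)")
    conn.executemany("INSERT INTO t VALUES (?)", [(i,) for i in range(2500)])
    cur = conn.execute("SELECT id FROM t ORDER BY id")
    print([len(chunk) for chunk in iter_chunks(cur, 1000)])  # [1000, 1000, 500]
    conn.close()
```

To use it with psycopg2, you would open a named cursor, for example `with conn.cursor(name="big_read") as cur:`. Then call `cur.execute("SELECT * FROM your_table")` and loop over `iter_chunks(cur, 5000)`. A generator keeps the chunking logic in one place, and with a server-side cursor the caller can stop early without transferring the remaining rows.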

Integrating Pandas for Data Processing

Once data is fetched in chunks with psycopg2, handing it to pandas for further analysis and manipulation is straightforward. Pandas provides excellent tools for data cleaning, transformation, and analysis. Combining psycopg2's chunked fetching with pandas' data manipulation features gives you a solid, efficient processing pipeline. This combination is especially useful for very large datasets, where memory is a significant constraint. Processing data in smaller, more manageable pieces greatly improves scalability and reliability.
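To get the memory benefit end to end, each chunk should be processed and discarded rather than accumulated. The sketch below assumes a hypothetical aggregation task: summing one column grouped by another. The helper names `iter_dataframes` and `total_by_key` are illustrative. The code relies only on standard DB-API cursor methods plus pandas `groupby` and `Series.add`, and it holds only the current chunk and the running totals in memory.

```python
from typing import Any, Iterator

import pandas as pd


def iter_dataframes(cursor: Any, chunk_size: int = 1000) -> Iterator[pd.DataFrame]:
    """Yield one DataFrame per fetchmany() batch from an executed DB-API cursor."""
    while True:
        rows = cursor.fetchmany(chunk_size)
        if not rows:
            break
        # Read column names after fetching; a psycopg2 named cursor may not
        # populate description until rows have been fetched.
        columns = [desc[0] for desc in cursor.description]
        yield pd.DataFrame(rows, columns=columns)


def total_by_key(cursor: Any, key: str, value: str, chunk_size: int = 1000) -> pd.Series:
    """Sum `value` grouped by `key`, one chunk at a time."""
    totals = pd.Series(dtype="float64")
    for chunk in iter_dataframes(cursor, chunk_size):
        partial = chunk.groupby(key)[value].sum()
        # Fold this chunk's partial sums into the running totals; keys missing
        # from either side count as 0. The chunk itself is then discarded.
        totals = totals.add(partial, fill_value=0)
    return totals
```

Passing a psycopg2 named cursor, after calling execute() on it, gives the same behavior against PostgreSQL. The running-total approach works for aggregations that can be combined across chunks, such as sums and counts. A median, for example, cannot be computed this way.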

Example: Loading PostgreSQL Data into a Pandas DataFrame in Chunks

Here's a code example demonstrating how to combine psycopg2's fetchmany() with pandas:

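```python
import psycopg2
import pandas as pd

conn = psycopg2.connect("dbname=your_database user=your_user password=your_password")
chunk_size = 1000  # Adjust as needed
frames = []  # one DataFrame per chunk, concatenated once at the end

try:
    # Naming the cursor makes it server-side: rows stay on the server
    # and each fetchmany() call transfers only chunk_size of them.
    with conn.cursor(name="chunked_reader") as cur:
        cur.execute("SELECT * FROM your_table")
        while True:
            chunk = cur.fetchmany(chunk_size)
            if not chunk:
                break
            # A named cursor may not populate description until rows are fetched.
            columns = [desc[0] for desc in cur.description]
            frames.append(pd.DataFrame(chunk, columns=columns))
finally:
    conn.close()

df = pd.concat(frames, ignore_index=True) if frames else pd.DataFrame()
print(df.head())
```

Remember to replace "dbname=your_database user=your_user password=your_password" and "your_table" with your actual database credentials and table name. Because the example uses a named cursor, the result set stays on the server and the client holds only one chunk of raw rows at a time. The chunks are collected in a list and concatenated once, which avoids copying the growing DataFrame on every iteration. Note that the final DataFrame still contains the whole table. If the table does not fit in RAM, process each chunk inside the loop and discard it instead of keeping it.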

Choosing the Right Chunk Size

Selecting an appropriate chunk size is crucial for performance. Too small a chunk size causes excessive overhead from many round trips to the database. Too large a size can overwhelm available memory. The ideal chunk size depends on several factors, including database server performance, network latency, the size of your data, and the RAM available on your machine. Experimentation and monitoring are highly recommended to find the best setting for your environment and data volume. Start with a reasonable size, then adjust based on your observations.
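One way to experiment is to drain the same query at several chunk sizes and compare wall-clock times. The hypothetical `time_chunk_sizes` helper below takes a cursor factory so that every run starts with a fresh cursor. With psycopg2, the factory would typically return a named cursor, since a named cursor can be executed only once. The helper measures time only, so pair it with an operating-system memory monitor.

```python
import time
from typing import Any, Callable, Dict, Iterable, Tuple


def time_chunk_sizes(
    open_cursor: Callable[[], Any],
    query: str,
    chunk_sizes: Iterable[int],
) -> Dict[int, Tuple[float, int]]:
    """Drain `query` once per chunk size; return {size: (seconds, rows_read)}."""
    results: Dict[int, Tuple[float, int]] = {}
    for size in chunk_sizes:
        cur = open_cursor()  # fresh cursor per run
        try:
            start = time.perf_counter()
            cur.execute(query)
            rows_read = 0
            while True:
                rows = cur.fetchmany(size)
                if not rows:
                    break
                rows_read += len(rows)  # placeholder for real per-chunk work
            results[size] = (time.perf_counter() - start, rows_read)
        finally:
            cur.close()
    return results
```

For example, you might call `time_chunk_sizes(lambda: conn.cursor(name="bench"), "SELECT * FROM your_table", [500, 2000, 10000])`. Database and OS caching can make the first run slower than later ones, so repeating each size a few times gives a fairer comparison.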

Factors Influencing Optimal Chunk Size

| Factor | Impact on Chunk Size |
|---|---|
| Database Server Performance | Faster servers allow for larger chunks. |
| Network Latency | High latency favors larger chunks, since every fetch is a round trip. |
| Available RAM | Limited RAM requires smaller chunks. |
| Data Volume | Larger datasets call for more carefully chosen chunk sizes. |
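As a rough starting point, the memory budget for one chunk can be divided by an estimated per-row cost. The sketch below is a heuristic, not a rule from psycopg2 or pandas. `estimate_chunk_size` is a hypothetical helper, and `avg_row_bytes` is something you would estimate from your own data. The `overhead_factor`, `min_rows`, and `max_rows` defaults are assumed values meant to be tuned.

```python
def estimate_chunk_size(
    memory_budget_bytes: int,
    avg_row_bytes: int,
    overhead_factor: float = 3.0,
    min_rows: int = 100,
    max_rows: int = 100_000,
) -> int:
    """Heuristic starting value for chunk_size; refine it by measurement.

    overhead_factor is an assumed multiplier for Python/pandas object
    overhead relative to the raw row size, not a measured constant.
    """
    if memory_budget_bytes <= 0 or avg_row_bytes <= 0:
        raise ValueError("budget and row size must be positive")
    # Rows that fit in the budget once per-row overhead is accounted for.
    rows = int(memory_budget_bytes // (avg_row_bytes * overhead_factor))
    # Clamp so the estimate never gets absurdly small or large.
    return max(min_rows, min(max_rows, rows))
```

Consider a 32 MiB budget with rows of about 512 bytes. The default overhead factor of 3 gives 33,554,432 // 1,536 = 21,845 rows per chunk, which you would then refine by measurement.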

Always test and refine your chunk size based on the performance you observe. Monitoring resource usage while data loads is crucial for identifying bottlenecks and optimizing your approach.

Efficiently loading data from PostgreSQL in chunks with psycopg2 is a critical skill for anyone working with large datasets. By combining psycopg2 with the convenience of pandas, you can build scalable and efficient data pipelines. Experiment with different chunk sizes to find the right balance between performance and memory usage. For more advanced techniques and for handling specific data types, explore the official psycopg2 documentation and the comprehensive pandas documentation. Learning more about database optimization strategies can further improve performance. Check out this excellent resource on PostgreSQL performance tuning.

#1 Python PostgreSQL Tutorial Using Psycopg2 [Complete Guide]

![Efficiently Load PostgreSQL Data in Chunks with Python psycopg2 and Pandas - Python PostgreSQL Tutorial Using Psycopg2 [Complete Guide]](http://pynative.com/wp-content/uploads/2018/08/python_postgresql_connection_to_access_database.png)

#2 04 How to insert data in PostgreSQL - YouTube

#3 Loading data from PostgreSQL in Select2 with PHP - Makitweb

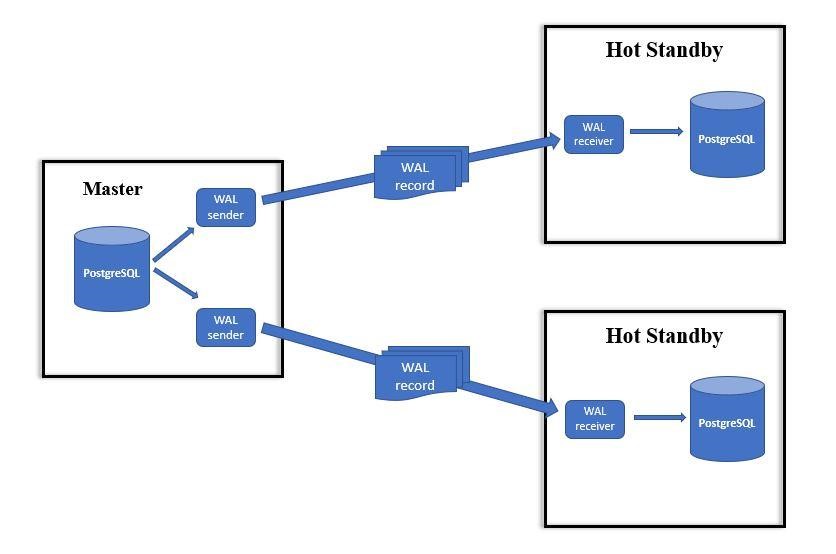

#4 Easy replication with Postgres 10 - Promantia Business Solutions Pvt. Ltd

#5 How To Efficiently Load Data Into Postgres Using Python

#6 Create A PostgreSQL Table And Insert Data Using The Psycopg2 Adapter

#7 How To Connect And Operate PostgreSQL With Python Using psycopg2 Lib

#8 How To Connect And Operate PostgreSQL With Python Using psycopg2 Lib