Importing and updating large datasets is a common task for database administrators and data scientists. The speed of this process significantly affects application performance and overall efficiency. Choosing the right method depends on several factors, including database type, data volume, and update frequency. This post explores different strategies for optimizing this task, focusing on SQL Server and PostgreSQL, and helps you find the fastest approach for your specific needs.

Optimizing Large Dataset Imports in SQL Server and PostgreSQL

When dealing with large datasets that require updates, the speed of the import process is paramount. Both SQL Server and PostgreSQL offer several methods for bulk loading, each with its own strengths and weaknesses. The best choice often involves a combination of methods tailored to the specific characteristics of your data and database environment. Factors such as data structure, data types, and update frequency all play a role in determining the most efficient method, so weigh them before committing to an approach.

The COPY Command for PostgreSQL: A Powerful Solution

PostgreSQL’s COPY command is known for its speed and efficiency when importing large datasets. It avoids the per-statement overhead of standard SQL INSERTs and loads rows into the table in a single operation. This direct approach significantly reduces overhead, making it ideal for high-volume imports. COPY can read data from various sources, including files and standard input, which gives flexibility in data ingestion workflows. Using the appropriate options, such as declaring a header row and the delimiter, helps the input parse correctly and reduces errors.
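As a rough illustration of the options mentioned above, the following Python sketch assembles a `COPY ... FROM STDIN` statement for CSV input. The helper names (`quote_ident`, `build_copy_sql`) and the `customers` table with its columns are hypothetical, chosen only for this example; the sketch only builds the SQL text and does not connect to a database.

```python
def quote_ident(name):
    """Double-quote an SQL identifier, escaping embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def build_copy_sql(table, columns, delimiter=",", header=True):
    """Build a PostgreSQL COPY statement that reads CSV data from STDIN.

    Hypothetical helper for illustration; table and column names are
    assumed to come from trusted configuration, not from user input.
    """
    if len(delimiter) != 1:
        raise ValueError("COPY expects a single-character delimiter")
    cols = ", ".join(quote_ident(c) for c in columns)
    header_opt = "true" if header else "false"
    delim = delimiter.replace("'", "''")  # escape a quote used as delimiter
    return (
        f"COPY {quote_ident(table)} ({cols}) FROM STDIN "
        f"WITH (FORMAT csv, HEADER {header_opt}, DELIMITER '{delim}')"
    )


# Example table and columns are assumptions, not taken from a real schema.
sql = build_copy_sql("customers", ["id", "name", "email"])
print(sql)
```

One way to run such a statement from Python is to pass it, together with an open file object, to a driver method that streams data to `COPY`, for example `cursor.copy_expert(sql, file)` in psycopg2. Whether that fits depends on the driver and version you use.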

SQL Server’s BULK INSERT Statement: A Robust Alternative

For SQL Server, the BULK INSERT statement offers a similar high-performance solution. It imports large datasets from data files, including text files and formatted data files. It handles large data volumes efficiently and can significantly reduce import time compared to standard INSERT statements. Like PostgreSQL’s COPY command, BULK INSERT gives control over data formatting and error handling through various options, which adds flexibility and robustness.
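To make the formatting and error-handling options more concrete, here is a small Python sketch that assembles a BULK INSERT statement. The helper name `build_bulk_insert_sql`, the `dbo.Customers` table, and the file path are assumptions made for this example; the option values are only plausible defaults for a comma-separated file with a header row.

```python
def build_bulk_insert_sql(table, file_path, field_terminator=",",
                          first_row=2, max_errors=0):
    """Build a SQL Server BULK INSERT statement for a delimited text file.

    Hypothetical helper for illustration. The table name is assumed to
    come from trusted configuration; the path must be readable by the
    SQL Server service, not by the client.
    """
    path = file_path.replace("'", "''")          # escape quotes in the path
    term = field_terminator.replace("'", "''")
    return (
        f"BULK INSERT {table} FROM '{path}' "
        f"WITH (FIELDTERMINATOR = '{term}', "
        f"ROWTERMINATOR = '\\n', "               # literal \n in the T-SQL text
        f"FIRSTROW = {int(first_row)}, "         # 2 skips a header line
        f"MAXERRORS = {int(max_errors)}, "       # 0 aborts on the first bad row
        f"TABLOCK)"                              # table-level lock during load
    )


# Example table and path are assumptions for this sketch.
sql = build_bulk_insert_sql("dbo.Customers", r"C:\data\customers.csv")
print(sql)
```

`FIRSTROW`, `MAXERRORS`, and `TABLOCK` are the kind of options the paragraph above refers to; which values suit a given load depends on the file and on how strictly bad rows should be treated.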

Comparing Import Methods: Speed and Efficiency

Choosing between import methods ultimately depends on your specific requirements and database system. Both PostgreSQL’s COPY and SQL Server’s BULK INSERT offer significant performance advantages over traditional row-by-row insertion. However, factors such as data format, data volume, and update frequency need to be considered for optimal performance. Below is a comparison table highlighting key differences:

| Feature | PostgreSQL (COPY) | SQL Server (BULK INSERT) |
|---|---|---|
| Speed | Very fast, optimized for bulk loading | Very fast, optimized for bulk loading |
| Data Sources | Files, standard input | Text files, formatted files |
| Error Handling | Supports error handling options | Supports error handling options |
| Data Transformation | Limited transformation capabilities within the command | Limited transformation capabilities within the command |

Strategies for Handling Updates During Import

When updates are required alongside the import, strategic planning is crucial. One approach uses a staging table: import the data into a temporary table, then apply the changes by comparing it with the target table using efficient UPDATE statements or MERGE statements (available in SQL Server and, from version 15, in PostgreSQL). This reduces locking issues and minimizes downtime during the update process. Alternatively, consider upsert operations, which handle both inserts and updates in a single operation. Learn more about PostgreSQL INSERT statements for a deeper understanding.
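The staging-table and upsert pattern can be sketched end to end in Python. To keep the example runnable without a database server, it uses the standard-library `sqlite3` module as a stand-in; this is a substitution for illustration, not the PostgreSQL or SQL Server workflow itself. The `products` and `products_staging` tables and their rows are made up. The `INSERT ... ON CONFLICT ... DO UPDATE` form shown here is also accepted by PostgreSQL, where the staging table would typically be filled with COPY instead of `executemany`.

```python
import sqlite3

# In-memory SQLite database used only as a stand-in for a real server.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL);
    CREATE TEMP TABLE products_staging (id INTEGER, name TEXT, price REAL);
    INSERT INTO products VALUES (1, 'widget', 9.99), (2, 'gadget', 19.99);
""")

# Step 1: load the incoming rows into the staging table (hypothetical data).
incoming = [(2, "gadget", 17.49), (3, "gizmo", 4.25)]
conn.executemany("INSERT INTO products_staging VALUES (?, ?, ?)", incoming)

# Step 2: one set-based upsert from staging into the target table.
# "WHERE true" is required by SQLite to parse INSERT ... SELECT ... ON CONFLICT;
# PostgreSQL does not need it, but it is harmless there.
conn.execute("""
    INSERT INTO products (id, name, price)
    SELECT id, name, price FROM products_staging WHERE true
    ON CONFLICT (id) DO UPDATE SET
        name = excluded.name,
        price = excluded.price
""")
conn.commit()

rows = conn.execute("SELECT id, name, price FROM products ORDER BY id").fetchall()
print(rows)  # [(1, 'widget', 9.99), (2, 'gadget', 17.49), (3, 'gizmo', 4.25)]
```

Row 2 is updated, row 3 is inserted, and row 1 is left untouched, all in a single statement against the target table. On SQL Server the same step would usually be written as a MERGE from the staging table.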

Conclusion: Choosing the Fastest Method

There’s no single “fastest” method for importing large datasets that also require updates. The best approach depends on your specific database system (PostgreSQL or SQL Server), data characteristics, and update requirements. PostgreSQL’s COPY and SQL Server’s BULK INSERT are excellent starting points for high-performance bulk loading. By employing staging tables, upsert operations, or efficient update strategies, and by optimizing database settings, you can significantly improve the speed and efficiency of your import and update processes. Remember to test different approaches with your own dataset to find what works best for your needs. Learn more about SQL Server BULK INSERT for more detailed information.

For further optimization, consider techniques such as batch processing, parallel loading, and indexing your tables appropriately. Learn more about PostgreSQL indexes. Properly planning and implementing these strategies can lead to substantial performance gains.
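Of the techniques listed above, batch processing is the easiest to show in isolation. The sketch below splits any iterable of rows into fixed-size batches so that each batch can be loaded and committed separately; the helper name `iter_batches` and the batch size are assumptions for this example, and a suitable size would need to be measured against your own data.

```python
from itertools import islice


def iter_batches(rows, size):
    """Yield lists of at most `size` rows from any iterable, lazily."""
    if size < 1:
        raise ValueError("size must be at least 1")
    it = iter(rows)
    while True:
        batch = list(islice(it, size))  # take the next `size` rows
        if not batch:
            return                      # source exhausted
        yield batch


# Hypothetical input: five rows split into batches of two.
batches = list(iter_batches(range(5), 2))
print(batches)  # [[0, 1], [2, 3], [4]]
```

Each yielded batch could then be passed to a bulk-load or `executemany` call inside its own transaction, which limits how much work is lost if one batch fails.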

#1 Core Data: Importing large data sets efficiently, UI/UX, app size, RAM

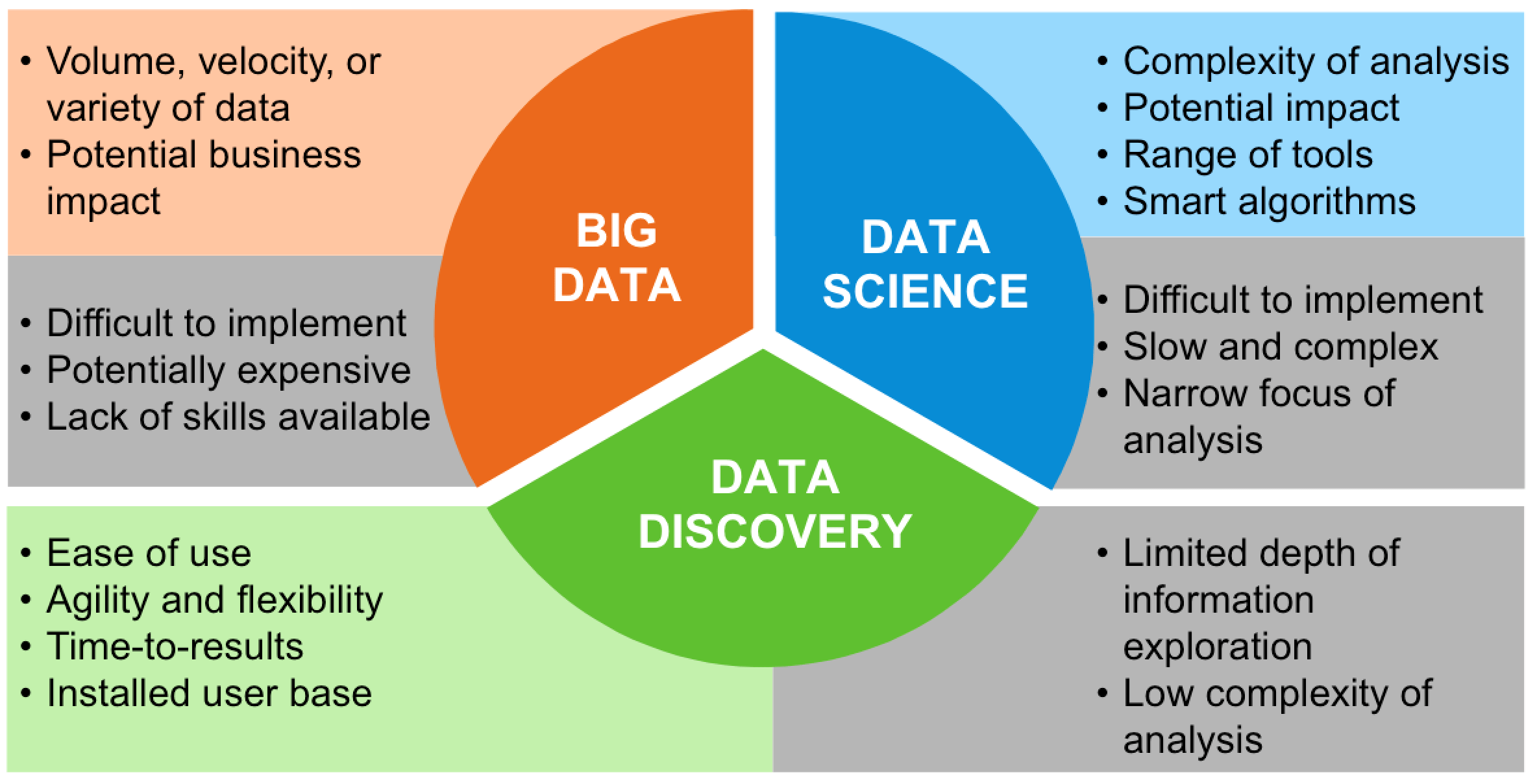

#2 Big Data - SenTai.eu

#3 Save Time Importing Large Data Sets for Dropdowns

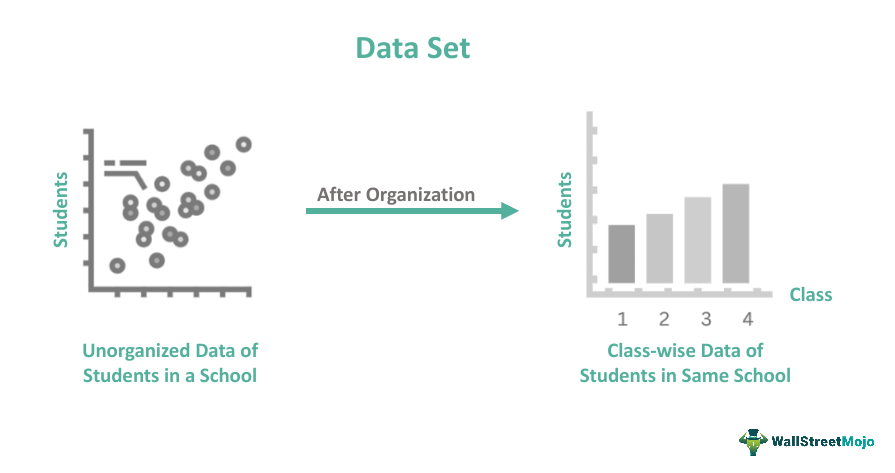

#4 Data Set - What Is It, Examples, Types, Vs DataFrame & Database

#5 Big Data and Machine Learning in .NET 5

#6 7 Handling large datasets | Excel for UoB students

#7 Tips For Importing Large Datasets Into Excel - Bank Czasu

#8 Handling Large Datasets for Machine Learning in Python - AskPython