Prefect, with its Databricks integration, provides a powerful platform for orchestrating complex data pipelines. However, debugging and monitoring these pipelines often requires knowing how to get feedback from inside the flow itself. This post addresses the common question of how to effectively log and view output from your Databricks tasks within a Prefect flow.

Getting Output from Your Prefect-Databricks Flow

Debugging and monitoring Prefect flows that interact with Databricks is crucial for keeping them running smoothly. One of the most straightforward ways to do this is to place logging statements strategically within your Databricks tasks. These logs can then be viewed in the Prefect UI, giving you near real-time insight into how your pipeline is executing. Good logging records the progress, successes, and potential failures of your Databricks jobs, which greatly helps with troubleshooting and optimization. For complex pipelines, this is often more useful than relying solely on the Databricks UI, because it brings the Databricks job's progress directly into your overall Prefect workflow view. By analyzing the logs, you can spot bottlenecks or errors quickly and improve the reliability and performance of the whole pipeline.
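To make the point about bottlenecks concrete, here is a minimal sketch in plain Python: a context manager that logs when a step starts and how long it ran, so slow steps stand out when you scan a run's logs. The logger name `pipeline`, the helper `log_duration`, and the step name are hypothetical, and the sketch uses only the standard library; whether these messages reach the Prefect UI depends on how logging is configured in your Prefect environment.

```python
import logging
import time
from contextlib import contextmanager

logger = logging.getLogger("pipeline")  # hypothetical logger name


@contextmanager
def log_duration(step_name: str):
    """Log when a step starts and how long it ran, even if it raises."""
    start = time.perf_counter()
    logger.info("Step %s started", step_name)
    try:
        yield
    finally:
        # Runs on success and on failure, so failed steps still report timing.
        elapsed = time.perf_counter() - start
        logger.info("Step %s finished in %.2f s", step_name, elapsed)


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
    with log_duration("load_orders"):  # hypothetical step name
        time.sleep(0.5)  # stands in for real Databricks work
```

Because the timing is logged in a `finally` block, a step that raises still reports how long it ran before failing, which is often exactly the information you need.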

Using Prefect’s Logging Capabilities

Prefect offers several ways to handle logging. The simplest is to use Python's built-in logging module inside your task; Prefect's own `get_run_logger()` returns a standard logger bound to the current flow or task run, and its messages appear in the Prefect UI. You can use the standard log levels (DEBUG, INFO, WARNING, ERROR, CRITICAL) to control what kind of information is recorded. Remember to configure your logger so that messages reach the Prefect UI rather than only the Databricks cluster logs. Well-structured logging makes debugging significantly easier and lets you quickly identify errors and performance issues in your Prefect-Databricks workflows.
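A minimal sketch of matching the log level to the outcome, using only the standard library so it runs anywhere. The logger name `databricks_job` and the helper `report_batch` are hypothetical; inside a real Prefect task (Prefect 2 and later) you would more likely obtain the logger with `from prefect import get_run_logger`, which this sketch does not call.

```python
import logging

logger = logging.getLogger("databricks_job")  # hypothetical logger name
logger.setLevel(logging.INFO)  # DEBUG is filtered out; INFO and above pass


def report_batch(rows_written: int, expected: int) -> None:
    """Hypothetical helper: choose a log level that matches the outcome."""
    logger.debug("Raw counts: written=%d expected=%d", rows_written, expected)
    if rows_written == expected:
        logger.info("Wrote %d rows", rows_written)
    elif rows_written > 0:
        logger.warning("Partial write: %d of %d rows", rows_written, expected)
    else:
        logger.error("No rows written (expected %d)", expected)


if __name__ == "__main__":
    logging.basicConfig(format="%(levelname)s %(name)s: %(message)s")
    report_batch(rows_written=950, expected=1000)  # emits a WARNING
```

Keeping routine progress at INFO and reserving WARNING and ERROR for real problems means you can later raise the threshold without losing the messages that matter.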

Leveraging print Statements (with Caution)

While print statements might seem like a quick fix, they are not ideal for robust logging in Prefect. By default, print output is not sent to the Prefect UI, so important information can go missing (recent Prefect versions can capture it if you pass `log_prints=True` to `@flow` or `@task`). You may see some of the output in the Databricks cluster logs, but this approach lacks the centralized visibility that Prefect's logging system provides. So while print is convenient for quick checks during development, rely on Prefect's dedicated logging methods for production pipelines.
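If you are stuck with existing code that uses print, one option is to route its output into a logger. The sketch below does this with the standard library's `contextlib.redirect_stdout` and a small hypothetical `LogWriter` class; it only illustrates the idea and is not how Prefect's `log_prints` option is implemented. The logger name `legacy_step` and the function `run_legacy_step` are placeholders.

```python
import contextlib
import logging

logger = logging.getLogger("legacy_step")  # hypothetical logger name


class LogWriter:
    """Minimal file-like object that forwards each printed line to a logger."""

    def __init__(self, target: logging.Logger, level: int = logging.INFO):
        self._target = target
        self._level = level
        self._buffer = ""

    def write(self, text: str) -> int:
        self._buffer += text
        # Log every complete line; keep a trailing partial line buffered.
        while "\n" in self._buffer:
            line, self._buffer = self._buffer.split("\n", 1)
            if line:
                self._target.log(self._level, line)
        return len(text)

    def flush(self) -> None:
        # Emit any partial line that is still buffered.
        if self._buffer:
            self._target.log(self._level, self._buffer)
            self._buffer = ""


def run_legacy_step() -> None:
    # Hypothetical existing code that reports progress with print().
    print("starting legacy step")
    print("legacy step done")


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(name)s %(levelname)s %(message)s")
    writer = LogWriter(logger)
    with contextlib.redirect_stdout(writer):
        run_legacy_step()
    writer.flush()
```

Because `redirect_stdout` swaps `sys.stdout` for the whole process, this approach is best kept around small, single-threaded sections of code.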

Advanced Logging Strategies for Prefect-Databricks

For more sophisticated logging, consider a structured logging library such as loguru or structlog. These libraries can serialize log messages as JSON, which makes logs easier to analyze programmatically, and they typically integrate with a variety of logging backends, giving you flexibility in how you store and access your logs. This pays off in large-scale data pipelines, where efficient log analysis is essential for maintenance and troubleshooting. Structured logs also make it easier to build custom dashboards and automated alerts, improving the monitoring and maintenance of your entire pipeline.
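loguru and structlog can produce JSON output out of the box. To show what such output looks like without adding a dependency, here is a standard-library stand-in: a hypothetical `JsonFormatter` that writes one JSON object per record and merges extra fields passed under an assumed `fields` key. It is not the API of either library.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Merge structured fields passed as logger.info(..., extra={"fields": {...}}).
        fields = getattr(record, "fields", None)
        if isinstance(fields, dict):
            payload.update(fields)
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


logger = logging.getLogger("structured")  # hypothetical logger name

if __name__ == "__main__":
    handler = logging.StreamHandler()  # writes to stderr
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid a second, plain-text copy via the root logger
    logger.info("Batch written", extra={"fields": {"table": "orders", "rows": 1200}})
    # stderr: {"level": "INFO", "logger": "structured", "message": "Batch written", "table": "orders", "rows": 1200}
```

One JSON object per line is easy to load into a log store or query tool, which is what makes dashboards and alerts on fields such as `rows` practical.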

Example: Using the logging Module

Here’s a basic example of how to use Python’s logging module within a Prefect task running on Databricks:

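```python
import logging

from prefect import task

# Module-level logger; its name is the module's import path.
logger = logging.getLogger(__name__)


@task  # makes this function a Prefect task
def my_databricks_task():
    logger.info("Databricks task started.")
    # ... your Databricks code ...
    logger.info("Databricks task completed.")
```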

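Remember to configure logging so that these messages are handled by Prefect. In Prefect 2 and later, one way is to list the logger’s name (here, the module name) in the `PREFECT_LOGGING_EXTRA_LOGGERS` setting; alternatively, call Prefect’s `get_run_logger()` inside the task instead of `logging.getLogger(__name__)`.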

Evaluating Logging Methods

| Method | Prefect UI Visibility | Ease of Use | Scalability |
|---|---|---|---|
| print | Poor | High | Low |
| logging module | Good | Medium | Medium |
| loguru/structlog | Excellent | Low | High |

Choose the method that best fits your needs and the complexity of the task. For simple tasks, the logging module may suffice. For complex pipelines that need advanced logging capabilities, consider loguru or structlog.

For further information, refer to the official Prefect and Databricks documentation. You can also explore community resources such as Stack Overflow for additional help.

Conclusion

Effectively logging your Databricks tasks within a Prefect flow is crucial for debugging, monitoring, and maintaining robust data pipelines. While simple print statements might seem appealing, Prefect’s logging capabilities and structured logging libraries offer far greater visibility, scalability, and maintainability. Choose the logging method that best suits your needs, and prioritize structured logging for production environments. By applying these strategies, you can significantly improve the reliability and efficiency of your Prefect-Databricks workflows.

#1 Bakugou Inspired Bow - Etsy | Bows, Etsy, Hair bows

#2 Can I run a flow once but start it in the future? - Help - Prefect

#3 Prefect Brings Dataflow Automation to Databricks

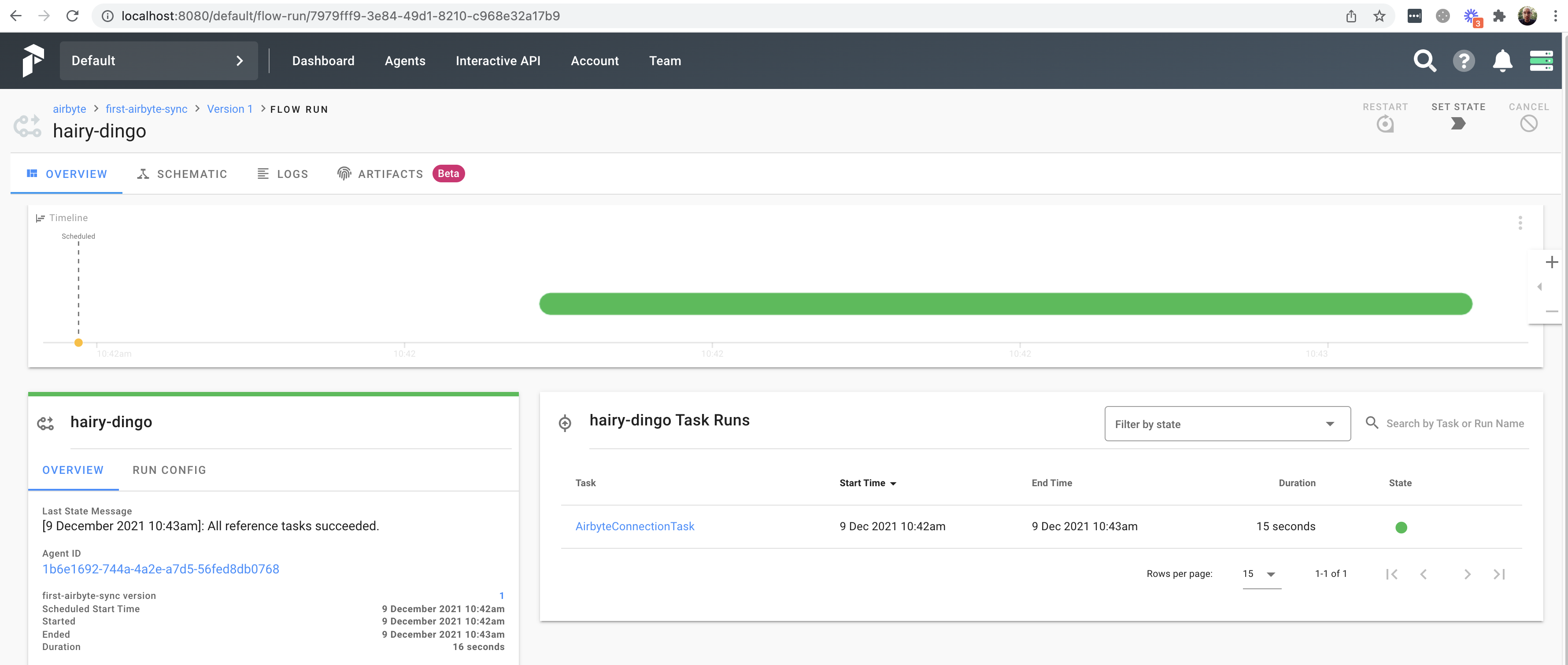

#4 Using the Prefect Airbyte Task | Airbyte Documentation

#5 How Can I remove flow/task runs stuck in a running or canceling state

#6 Can one add context in prefect2 during the flow? - Archive - Prefect

#7 An Introduction to Your First Prefect Flow - YouTube

#8 Sanoj Thomas on Twitter: " :