Web scraping is a almighty method for extracting information from web sites, but galore websites necessitate logins earlier you tin entree the invaluable accusation. This weblog station volition usher you done creating a Python book utilizing Selenium WebDriver to automate the login procedure and extract information from a website utilizing Chrome. We volition screen the indispensable steps, libraries, and champion practices to aid you efficaciously scrape information down login partitions.

Automating Website Login with Selenium and Python

Selenium is a almighty browser automation model that allows you to power a web browser programmatically. This means you tin compose codification that simulates person actions similar navigating to pages, filling types, clicking buttons, and interacting with website elements. By combining Selenium with Python’s robust libraries, we tin make a book that automatically logs into a website, navigates to the desired leaf, and extracts the required information. This eliminates the tedious handbook procedure of logging successful and copying information all clip you demand it. Utilizing Selenium besides ensures consistency and accuracy successful information extraction, minimizing quality mistake. Retrieve, ever regard the website’s status of work and robots.txt earlier attempting to scrape information.

Mounting ahead your Improvement Situation

Earlier you commencement, ensure you person the essential instruments installed. Archetypal, instal Python. Adjacent, you’ll demand to instal the Selenium room utilizing pip: pip instal selenium. You’ll besides demand a webdriver – a programme that controls the browser. Obtain the due webdriver for your browser (similar ChromeDriver for Chrome) and brand certain it’s successful your scheme’s Way situation adaptable oregon specify its way successful your book. Eventually, see utilizing a digital situation to negociate your task dependencies. This retains your task remoted and avoids conflicts with another initiatives.

Extracting Information Station-Login: A Measure-by-Measure Usher

This conception outlines the procedure of gathering a Python book that logs into a website and extracts information. We volition direction connected a broad attack, adaptable to assorted web sites. The circumstantial selectors (IDs, people names, XPath) volition demand accommodation based connected the website’s HTML construction. Ever inspect the website’s HTML utilizing your browser’s developer instruments to place the accurate selectors for the elements you are focusing on. Inspecting the elements volition beryllium important for close focusing on of your information.

Coding the Login and Information Extraction

Present’s a basal illustration. Retrieve to regenerate placeholders similar “username”, “password”, “your_xpath” with the existent values from the mark website. This codification archetypal initiates the Chrome browser, navigates to the login leaf, locates the username and password fields, enters credentials, submits the signifier, and past extracts information utilizing an XPath selector. Mistake dealing with is important to forestall book crashes. Ever see attempt…but blocks to grip possible exceptions.

from selenium import webdriver from selenium.webdriver.communal.by import By from selenium.webdriver.activity.ui import WebDriverWait from selenium.webdriver.activity import expected_conditions arsenic EC operator = webdriver.Chrome() operator.acquire("https://www.illustration.com/login") Regenerate with your login URL attempt: username_field = WebDriverWait(operator, 10).till( EC.presence_of_element_located((By.ID, "username")) ) password_field = operator.find_element(By.ID, "password") Regenerate with due selector username_field.send_keys("your_username") password_field.send_keys("your_password") login_button = operator.find_element(By.XPATH, "//fastener[@kind='subject']") Regenerate with due selector login_button.click on() Delay for the information to burden WebDriverWait(operator, 10).till(EC.presence_of_element_located((By.XPATH, "//your_xpath"))) information = operator.find_element(By.XPATH, "//your_xpath").matter Regenerate with due selector mark(information) but Objection arsenic e: mark(f"An mistake occurred: {e}") eventually: operator.discontinue()

This is a simplified illustration. Existent-planet scenarios frequently necessitate much blase methods, including dealing with dynamic contented, pagination, and assorted types of selectors (ID, people sanction, CSS selector, XPath).

Champion Practices and Considerations

Ever adhere to the website’s status of work. Extreme scraping tin overload a server, truthful beryllium mindful of the charge astatine which you entree the tract. See utilizing methods similar rotating proxies to debar being blocked. Instrumentality mistake dealing with to gracefully grip unexpected conditions. Regularly replace your Selenium and webdriver variations to payment from bug fixes and show improvements. Usage due selectors to precisely mark the desired information; XPath is a almighty but possibly dilatory method, see CSS selectors for sooner show once due.

Ethical Web Scraping

Liable web scraping is important. Larn much astir ethical web scraping to debar ineligible points and keep bully relationships with website owners. Ever cheque a tract’s robots.txt record to seat which parts of the tract are disconnected-limits to scrapers. Regard the tract’s status of work and debar overloading their servers. If you’re uncertain astir thing, it’s ever champion to err connected the broadside of warning.

Decision

Gathering a Python book to extract information from a website last logging successful utilizing Selenium WebDriver is a invaluable accomplishment for information acquisition. Retrieve to ever regard the website’s status of work and robots.txt. By pursuing the steps and champion practices outlined successful this usher, you tin effectively and ethically extract the information you demand. Commencement practising with elemental web sites and gradually sort out much analyzable scenarios. Larn much astir Selenium to grow your web scraping capabilities. Blessed scraping!

#1 How to scrape HTML data using Python Libraries? - ITS

#2 Geospatial Solutions Expert: Processing HTML form data with Python and

#3 Sending POST Requests to Python FastAPI from Another Python Script

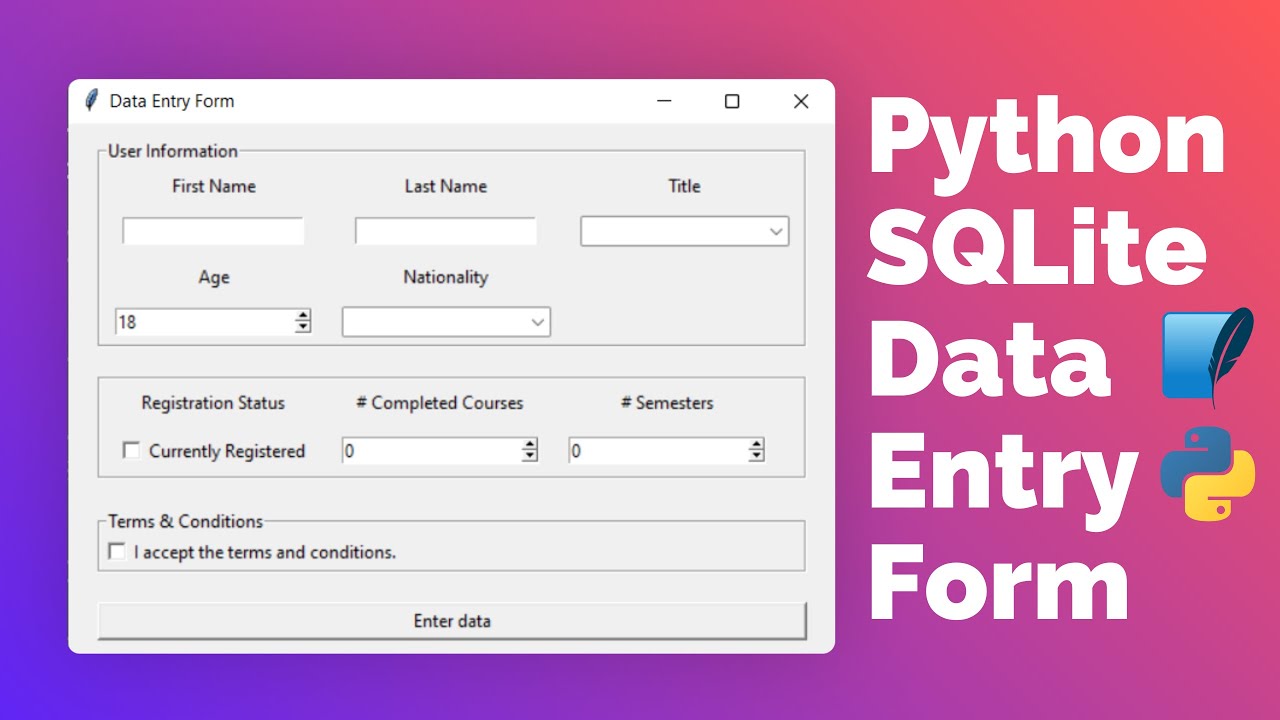

#4 Python SQLite Data Entry Form with Tkinter tutorial for beginners

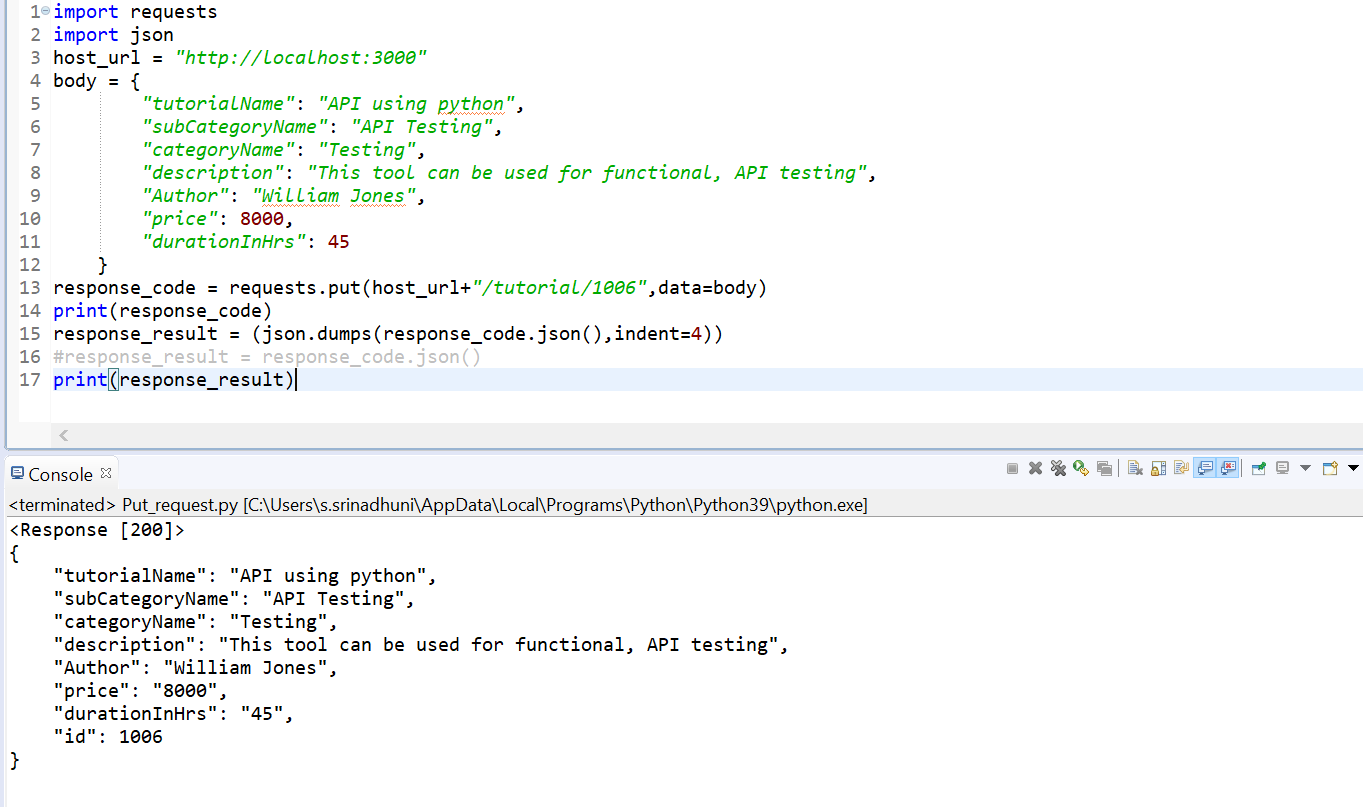

#5 API Testing Using Python Script

#6 A fantastic python script to extract specified/required data from PDF

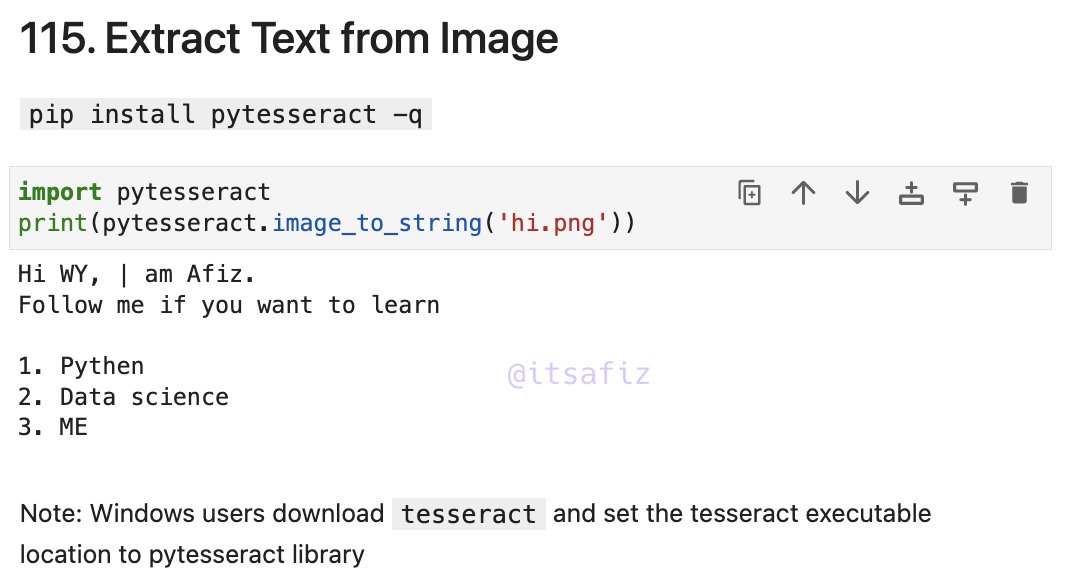

#7 Extract Text from Images using Python In the previous post, we have

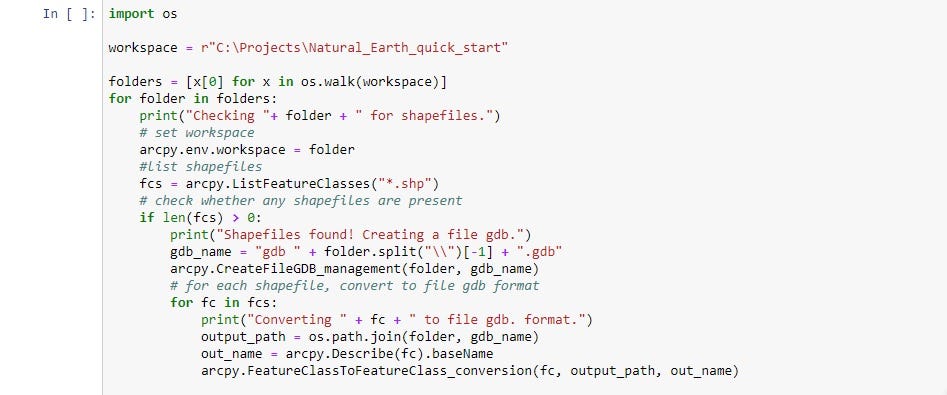

#8 ArcGIS Pro Tutorial: Creating a Script Tool from a Standalone Python