Dealing with unexpected results from PyTorch’s softmax function can be frustrating, especially when working on critical NLP tasks. This post covers common reasons why torch.nn.functional.softmax might produce inaccurate outputs and presents practical ways to troubleshoot and resolve these issues. Understanding these problems is crucial for building robust and reliable deep learning models.

Unexpected Softmax Behavior in PyTorch

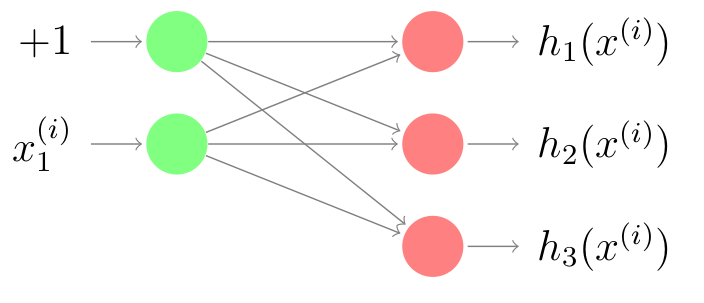

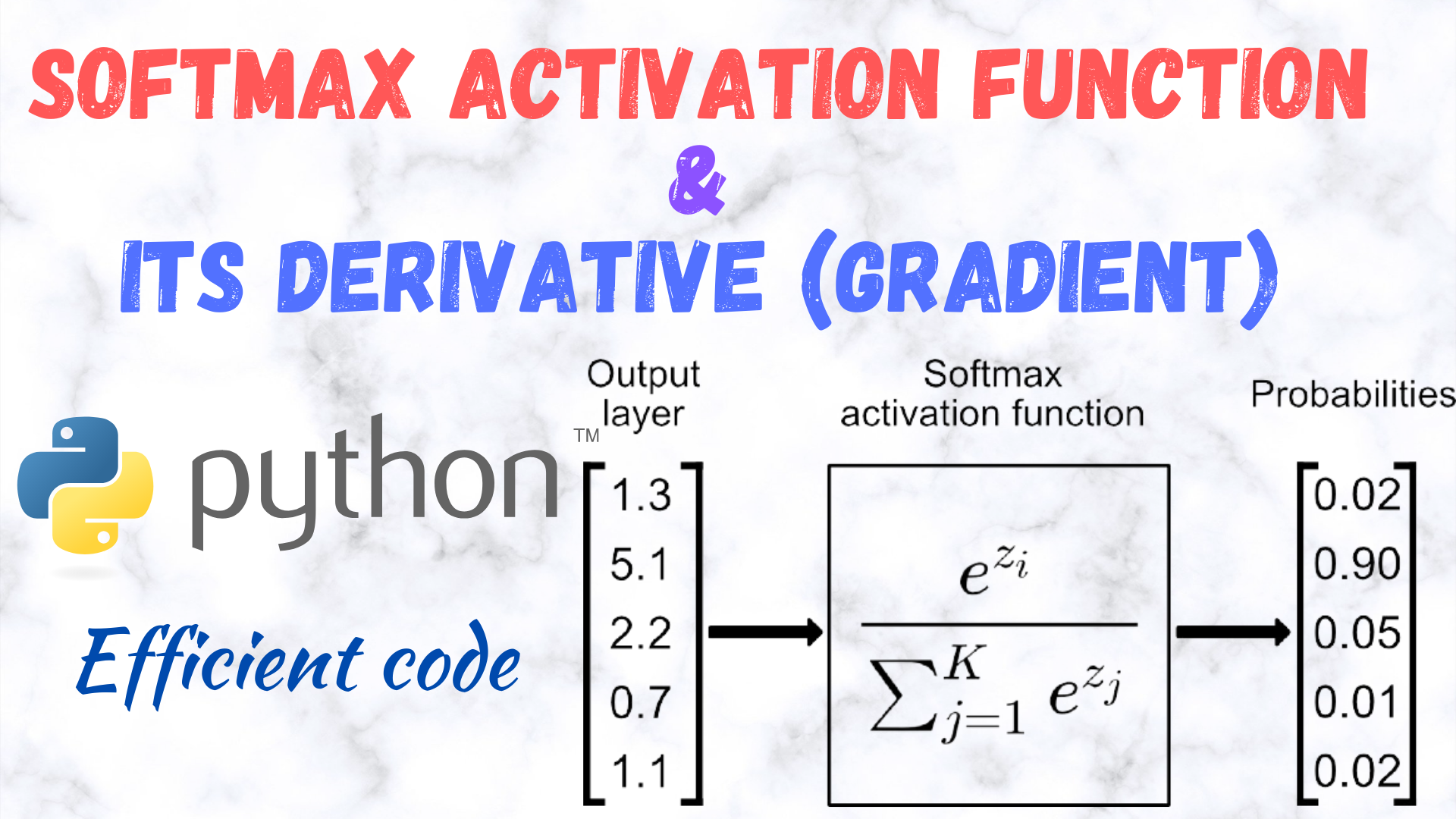

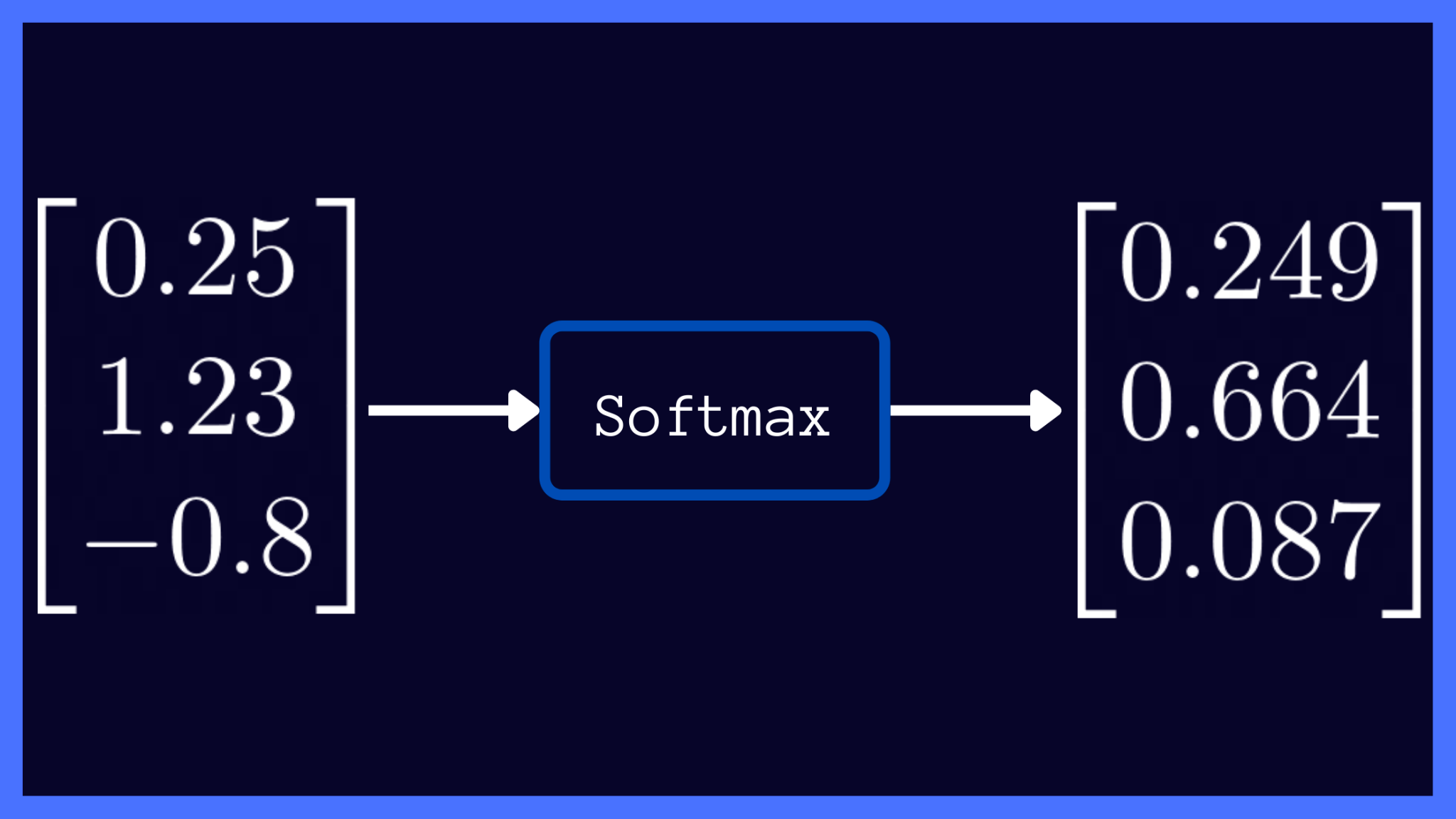

The torch.nn.functional.softmax function, a cornerstone of many deep learning models, converts a vector of arbitrary real numbers into a probability distribution. Each element of the output vector represents the probability of a given class, and the probabilities sum to one. However, various factors can lead to outputs that don’t behave as expected, potentially throwing off model accuracy and interpretation. Numerical instability, incorrect input handling, and unexpected interactions with other parts of your model are the prime suspects. Understanding these sources of inaccuracy is critical for debugging and for ensuring the reliability of your results. We will explore these issues in more detail below, with practical solutions and strategies for avoiding them. Proper use of softmax is vital for applications such as sentiment analysis and text classification, where accurate probability estimates are essential.
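For reference, the standard definition of softmax for an input vector $x \in \mathbb{R}^K$ (the symbols $x$, $K$, $i$, and $j$ are notation introduced here, not taken from the post) is:

$$
\mathrm{softmax}(x)_i = \frac{e^{x_i}}{\sum_{j=1}^{K} e^{x_j}}, \qquad i = 1, \dots, K.
$$

As a small worked case, $x = (1, 2, 3)$ gives approximately $(0.090, 0.245, 0.665)$: every entry lies between 0 and 1, and the entries sum to 1.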

Numerical Instability and Overflow Errors

One common reason for seemingly inaccurate softmax outputs is numerical instability. When dealing with very large or very small numbers, the exponential function used in softmax ($e^x$) can overflow or underflow. Overflow occurs when the exponential becomes too large for the data type to represent, resulting in inf (infinity), while underflow results in 0. Either can distort the probability distribution, because the normalizing division then evaluates inf / inf or 0 / 0, both of which yield NaN. The solution often involves subtracting the maximum value from the input vector before applying the exponential function, a common stabilization technique employed in many softmax implementations. This prevents extremely large values from dominating the calculation, leading to more numerically stable results.
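The failure mode described above can be reproduced with a deliberately naive implementation. The sketch below is illustrative only: `naive_softmax` is a hypothetical helper written for this example, not PyTorch's own code, and it assumes float32 tensors.

```python
import torch


def naive_softmax(x: torch.Tensor, dim: int = -1) -> torch.Tensor:
    """Textbook softmax with no stabilization (hypothetical, for illustration)."""
    exp_x = torch.exp(x)
    return exp_x / exp_x.sum(dim=dim, keepdim=True)


# Large logits: exp() overflows to inf in float32, so the division is inf / inf.
large_logits = torch.tensor([1000.0, 999.0, 998.0])
naive_large = naive_softmax(large_logits)

# Very negative logits: exp() underflows to 0, so the division is 0 / 0.
small_logits = torch.tensor([-1000.0, -999.0, -998.0])
naive_small = naive_softmax(small_logits)

print(naive_large)  # every entry is nan
print(naive_small)  # every entry is nan
```

Both inputs differ only by a constant shift, so a well-behaved softmax should return the same distribution for each; the naive version returns NaN for both.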

Incorrect Input Handling

Another source of problems lies in the input provided to the softmax function. If the input tensor contains NaN (Not a Number) or inf values, the softmax function will produce nonsensical outputs. Always ensure that your input data is properly preprocessed and free of such values. Careful data cleaning and validation steps, such as checking for invalid values and handling missing data, can prevent unexpected behavior. Regularly inspecting your input data for anomalies can improve the accuracy of your results and ultimately the reliability of your model’s predictions.
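One way to check for such values before calling softmax is sketched below. `report_non_finite` is a hypothetical helper name introduced for this example; it relies only on `torch.isnan`, `torch.isinf`, and `torch.isfinite`.

```python
import torch
import torch.nn.functional as F


def report_non_finite(x: torch.Tensor) -> dict:
    """Count NaN and +/-inf entries in a tensor (hypothetical helper)."""
    return {
        "nan": int(torch.isnan(x).sum()),
        "inf": int(torch.isinf(x).sum()),
    }


# Made-up logits: the first row contains a single NaN, the second row is clean.
logits = torch.tensor([[2.0, float("nan"), 0.5],
                       [1.0, 0.0, -1.0]])

counts = report_non_finite(logits)
probs = F.softmax(logits, dim=-1)

# One NaN contaminates every probability in its row,
# while the clean row is unaffected.
row_ok = torch.isfinite(probs).all(dim=-1)

print(counts)
print(row_ok)
```

Running a check like this on the logits, rather than only on the final probabilities, makes it easier to tell whether the bad values come from the data or from an earlier layer.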

Troubleshooting Strategies for Inaccurate Softmax

If you’re encountering unexpected behavior from your softmax function, systematic debugging is key. First, carefully examine your input data. Verify that it’s within the expected range and doesn’t contain any invalid values. Second, check your model’s architecture and ensure there are no unintended interactions with other layers that might corrupt the input to the softmax. Third, consider implementing numerical stabilization techniques, such as subtracting the maximum value before exponentiation. This is a frequently used way to avoid overflow or underflow issues in softmax calculations.
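For the second step, one possible approach is to attach a forward hook to the layer that feeds the softmax and record what it emits. The model below (`TinyClassifier`), its layer sizes, and the `record_logit_stats` hook are all hypothetical and exist only to make the sketch runnable; `register_forward_hook` is the standard `nn.Module` method.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TinyClassifier(nn.Module):
    """Hypothetical two-layer model used only for this illustration."""

    def __init__(self):
        super().__init__()
        self.hidden = nn.Linear(4, 8)
        self.out = nn.Linear(8, 3)

    def forward(self, x):
        logits = self.out(torch.relu(self.hidden(x)))
        return F.softmax(logits, dim=-1)


stats = {}


def record_logit_stats(module, inputs, output):
    # Called after `module.forward`; here `output` is the pre-softmax logits.
    stats["min"] = output.min().item()
    stats["max"] = output.max().item()
    stats["all_finite"] = bool(torch.isfinite(output).all())


model = TinyClassifier()
handle = model.out.register_forward_hook(record_logit_stats)

probs = model(torch.randn(2, 4))
handle.remove()  # detach the hook once debugging is done

print(stats)
```

Because the hook returns nothing, it only observes the logits and does not alter the forward pass.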

Using Numerical Stabilization Strategies

A common technique to improve the numerical stability of softmax is to subtract the maximum value of the input vector before applying the exponential function. The shift does not change the result, because softmax is invariant to adding the same constant to every input, but it guarantees that the largest exponent is 0 and so prevents extremely large values from dominating the computation. This simple step can drastically improve the accuracy and reliability of the softmax output, especially when dealing with high-dimensional vectors or vectors whose values span a large dynamic range. Consider it a key step in making a softmax implementation robust.
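A minimal sketch of the max-subtraction technique follows. `stable_softmax` is a hypothetical name for a hand-written version; it is compared against the built-in `F.softmax` on made-up logits purely as a sanity check.

```python
import torch
import torch.nn.functional as F


def stable_softmax(x: torch.Tensor, dim: int = -1) -> torch.Tensor:
    """Softmax with the max-subtraction trick (illustrative sketch)."""
    # After the shift the largest entry along `dim` is exactly 0.
    shifted = x - x.max(dim=dim, keepdim=True).values
    # No exponent exceeds 0, so no value exceeds 1 and nothing overflows.
    exp_shifted = torch.exp(shifted)
    return exp_shifted / exp_shifted.sum(dim=dim, keepdim=True)


logits = torch.tensor([[1000.0, 999.0, 998.0],
                       [-3.0, 0.0, 3.0]])

manual = stable_softmax(logits)
builtin = F.softmax(logits, dim=-1)

print(manual)
print(torch.allclose(manual, builtin, atol=1e-6))
```

In this comparison the built-in function also handles the large logits without producing inf or NaN, so a hand-written version like this is mostly useful when implementing softmax yourself or when trying to understand where an instability comes from.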

| Technique | Description | Advantages | Disadvantages |
|---|---|---|---|
| Subtracting Maximum | Subtract the maximum value from the input vector before applying the exponential function. | Improved numerical stability, prevents overflow/underflow. | Slightly more computationally expensive. |
| Log-Sum-Exp | Uses the log-sum-exp trick for improved numerical stability. | Highly stable, avoids overflow/underflow. | More complex to implement. |
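The log-sum-exp row can be sketched with `torch.logsumexp`, using the identity log softmax(x) = x - logsumexp(x). The helper name `log_softmax_via_lse` and the example logits are assumptions made for this illustration.

```python
import torch
import torch.nn.functional as F


def log_softmax_via_lse(x: torch.Tensor, dim: int = -1) -> torch.Tensor:
    """Compute log-probabilities as x - logsumexp(x) (illustrative sketch)."""
    return x - torch.logsumexp(x, dim=dim, keepdim=True)


logits = torch.tensor([1000.0, 999.0, 998.0])

log_probs = log_softmax_via_lse(logits)
probs = log_probs.exp()  # back to probabilities only if they are needed

reference = F.log_softmax(logits, dim=-1)

print(log_probs)
print(probs)
```

Staying in log space is often preferable when the next step is a log-likelihood loss, since it avoids exponentiating and then taking a logarithm again. With float32 logits of this magnitude, small rounding differences between this sketch and `F.log_softmax` are to be expected.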

Remember to always validate your results. Compare the softmax outputs to your expectations and verify that they represent a valid probability distribution (values between 0 and 1 that sum to 1). If inaccuracies persist, consider consulting the PyTorch documentation and seeking help from the community through forums like PyTorch Forums or Stack Overflow.
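The validity check described above can be written as a small helper. `is_valid_distribution` and its tolerance are hypothetical choices for this sketch; the second call illustrates one way the check can fail, namely normalizing over the batch axis instead of the class axis.

```python
import torch
import torch.nn.functional as F


def is_valid_distribution(probs: torch.Tensor, dim: int = -1, atol: float = 1e-5) -> bool:
    """Check finiteness, the [0, 1] range, and that slices along `dim` sum to 1."""
    if not torch.isfinite(probs).all():
        return False
    in_range = bool(((probs >= 0) & (probs <= 1)).all())
    sums = probs.sum(dim=dim)
    sums_to_one = torch.allclose(sums, torch.ones_like(sums), atol=atol)
    return in_range and sums_to_one


# Made-up batch of two examples with three classes each.
logits = torch.tensor([[1.0, 2.0, 3.0],
                       [1.0, 2.0, 3.0]])

good = is_valid_distribution(F.softmax(logits, dim=-1))       # normalized per example
wrong_axis = is_valid_distribution(F.softmax(logits, dim=0))  # normalized across the batch

print(good, wrong_axis)
```

A check like this is cheap enough to leave in place as an assertion during development and remove once the pipeline is trusted.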

Conclusion

While torch.nn.functional.softmax is a powerful tool, understanding potential pitfalls and implementing robust troubleshooting strategies is crucial for obtaining accurate results. By carefully inspecting your input data, employing numerical stabilization techniques, and thoroughly validating your outputs, you can build reliable and accurate deep learning models. Addressing numerical instability and handling potential input errors are key steps towards ensuring the accuracy and reliability of your softmax calculations. Remember to consult the official PyTorch documentation and community resources for further assistance and best practices. For a deeper dive into numerical stability in machine learning, you might find resources on Deep Learning helpful.
