Saving multiple Pandas DataFrames to a single JSON file is a common task in data processing and analysis. This guide walks you through several methods and best practices so that your data is saved efficiently and can be easily retrieved. We'll cover different approaches, highlight their advantages and disadvantages, and provide practical examples to help you choose the best method for your specific needs. Knowing how to manage and store your DataFrame data effectively is crucial for maintaining data integrity and streamlining your workflow. This post focuses primarily on Python and its Pandas library.

Efficiently Serializing Multiple DataFrames into a Single JSON File

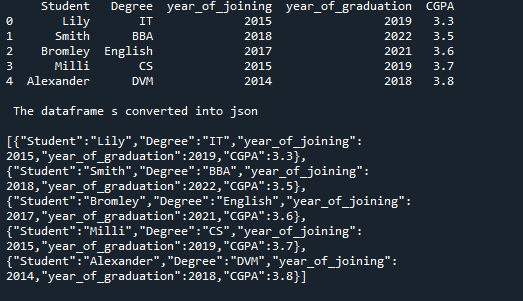

The most straightforward approach is to create a Python dictionary where the keys are DataFrame names and the values are the corresponding DataFrame data (often converted to a list of dictionaries). This dictionary can then be serialized to a JSON file. The method offers flexibility and readability, making it easy to identify and retrieve individual DataFrames, and it gives you fine control when dealing with heterogeneous DataFrames, since each one keeps its own columns under its own key. However, consider the size of your DataFrames: extremely large datasets can produce very large JSON files, which may hurt performance.

Structuring Your Data for Optimal JSON Serialization

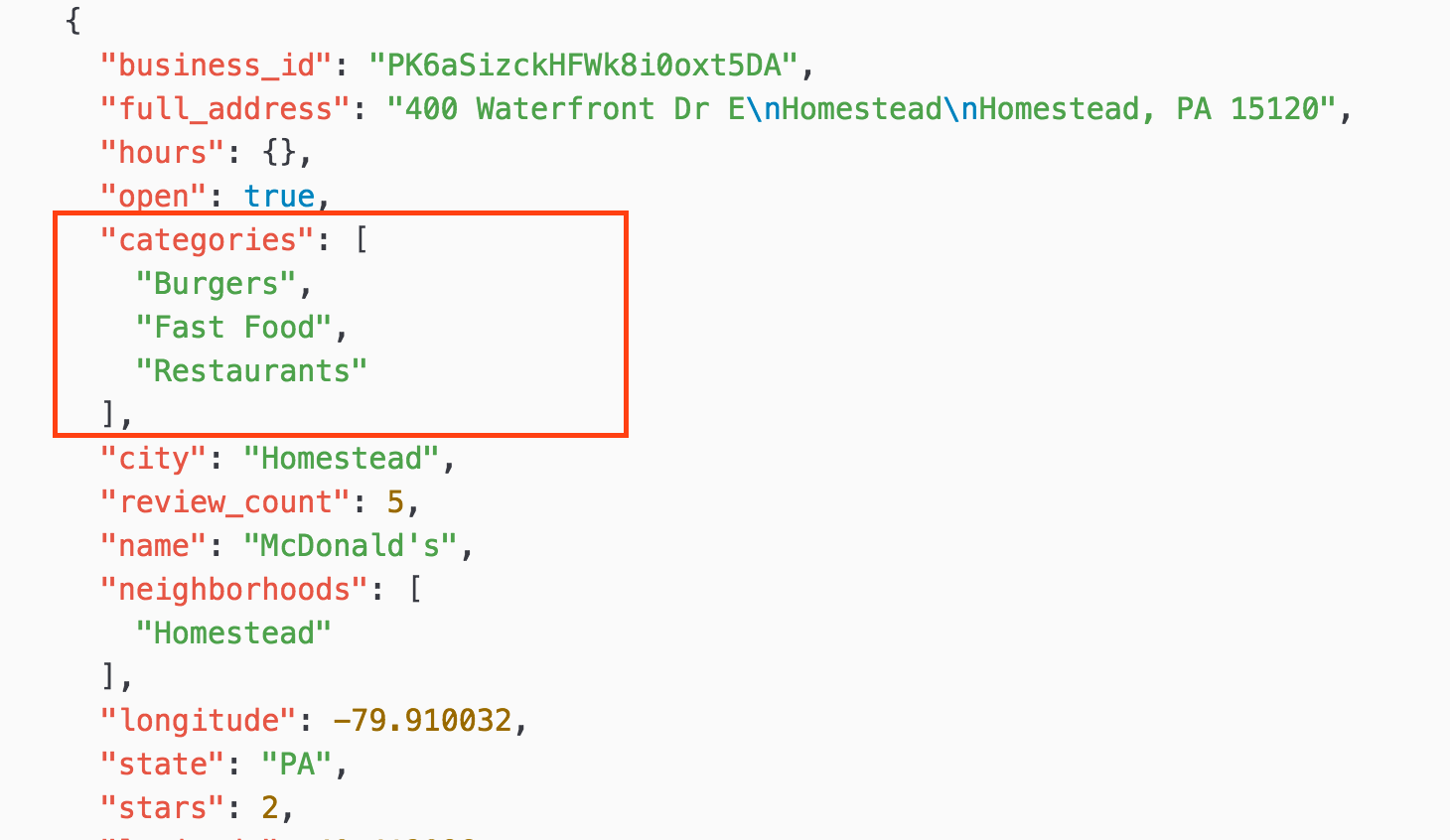

Before converting your DataFrames to JSON, carefully consider the data structure. Make sure your data types are compatible with JSON (e.g., avoid unsupported types like NumPy's datetime64, or convert them to strings first). Converting your DataFrame to a list of dictionaries is generally a good approach, as this structure translates well to JSON. For complex nested structures, consider using a more robust JSON library that can handle more sophisticated data types. Remember to handle potential errors gracefully, such as those caused by incompatible data types.
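One way to deal with the datetime issue mentioned above is to let Pandas do the conversion. The sketch below is only an illustration: the `events` frame and the helper name `to_json_safe_records` are made up for this example. It relies on `DataFrame.to_json` with `date_format="iso"` to turn datetimes into ISO 8601 strings, then parses the result back into plain Python objects.

```python
import json

import pandas as pd

# Hypothetical example frame with a datetime64 column
events = pd.DataFrame({
    "when": pd.to_datetime(["2024-01-01", "2024-01-02"]),
    "value": [1.5, 2.5],
})


def to_json_safe_records(df: pd.DataFrame) -> list:
    """Return a list of row dicts that contain only JSON-compatible values."""
    # to_json writes datetimes as ISO 8601 strings; parsing the text back
    # yields plain Python lists, dicts, strings, and numbers.
    return json.loads(df.to_json(orient="records", date_format="iso"))


records = to_json_safe_records(events)

# The records can now be embedded in a larger dictionary and dumped as usual
payload = json.dumps({"events": records})
```

The trade-off is that dates come back as strings when the file is loaded, so they need to be parsed again (for example with `pd.to_datetime`) if you want datetime columns.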

Alternative Approaches to Handling Multiple DataFrames

While the dictionary-based approach is common, alternatives exist. You could save each DataFrame to a separate JSON file and then create a master JSON file containing links or metadata pointing to each individual file. This approach is useful for very large DataFrames: retrieval is faster because you load only the file you need instead of one massive JSON file, which also helps scalability. However, it increases file management complexity and requires a well-defined naming convention.
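A minimal sketch of this layout might look like the following. The function names `save_with_index` and `load_one`, the `index.json` file name, and the metadata fields are all assumptions made for illustration, not an established convention.

```python
import json
import tempfile
from pathlib import Path

import pandas as pd


def save_with_index(frames: dict, out_dir) -> Path:
    """Write each DataFrame to its own JSON file plus an index.json listing them."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    index = {}
    for name, df in frames.items():
        file_name = f"{name}.json"  # naming convention: <name>.json
        df.to_json(out_dir / file_name, orient="records")
        index[name] = {
            "file": file_name,
            "rows": len(df),
            "columns": [str(c) for c in df.columns],
        }
    index_path = out_dir / "index.json"
    index_path.write_text(json.dumps(index, indent=4))
    return index_path


def load_one(index_path, name: str) -> pd.DataFrame:
    """Load a single DataFrame by name without reading the other files."""
    index_path = Path(index_path)
    index = json.loads(index_path.read_text())
    return pd.read_json(index_path.parent / index[name]["file"], orient="records")


df1 = pd.DataFrame({"A": [1, 2, 3], "B": [4, 5, 6]})
df2 = pd.DataFrame({"C": [7, 8, 9], "D": [10, 11, 12]})

# A temporary directory keeps the demo from leaving files behind
with tempfile.TemporaryDirectory() as tmp:
    index_path = save_with_index({"df1": df1, "df2": df2}, tmp)
    index = json.loads(index_path.read_text())
    restored = load_one(index_path, "df2")
```

Storing relative file names in the index, rather than absolute paths, means the whole directory can be moved or copied without breaking the links.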

Comparing Different Serialization Strategies

| Method | Advantages | Disadvantages |
|---|---|---|
| Single JSON File (Dictionary) | Simple, easy to read, all data in one place | Can create large files for huge datasets, slower loading times |
| Multiple JSON Files with Index | Better for large datasets, faster loading times for individual DataFrames | Increased file management overhead, more complex structure |

Practical Implementation: A Python Example

Let's illustrate the dictionary approach with a code example:

    import json

    import pandas as pd

    df1 = pd.DataFrame({'A': [1, 2, 3], 'B': [4, 5, 6]})
    df2 = pd.DataFrame({'C': [7, 8, 9], 'D': [10, 11, 12]})

    # Map each DataFrame name to its rows as a list of dictionaries
    data = {'df1': df1.to_dict('records'), 'df2': df2.to_dict('records')}

    # Write everything to a single JSON file
    with open('dataframes.json', 'w') as f:
        json.dump(data, f, indent=4)

This code snippet demonstrates how to serialize two DataFrames (df1 and df2) into a single JSON file named dataframes.json. The to_dict('records') method converts each DataFrame to a list of dictionaries, one per row, before serialization. The indent=4 parameter improves readability by adding indentation to the JSON output, at the cost of a somewhat larger file.
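Reading the file back is the mirror image of writing it. The helper below, `load_dataframes`, is a hypothetical name used for illustration; it assumes the file has the layout produced above, with each key mapping to a list of row dictionaries. The demo writes a small sample file to a temporary directory so the block runs on its own.

```python
import json
import os
import tempfile

import pandas as pd


def load_dataframes(path) -> dict:
    """Rebuild a {name: DataFrame} dictionary from a single JSON file."""
    with open(path) as f:
        data = json.load(f)
    # Each value is a list of row dictionaries, which DataFrame accepts directly
    return {name: pd.DataFrame(records) for name, records in data.items()}


# Demo: write a small sample file in that layout, then read it back
sample = {
    "df1": [{"A": 1, "B": 4}, {"A": 2, "B": 5}],
    "df2": [{"C": 7, "D": 10}],
}
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "dataframes.json")
    with open(path, "w") as f:
        json.dump(sample, f)
    frames = load_dataframes(path)
```

With the file from the earlier example, the call would simply be `load_dataframes('dataframes.json')`. Note that the original row index is not stored in the records layout, so each restored DataFrame gets a fresh default index.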

Best Practices and Considerations

Consider data compression to reduce file sizes, especially for large datasets. Libraries like gzip or zlib can be used in conjunction with JSON serialization to reduce storage space. For exceptionally large datasets, consider a more efficient storage format like Parquet or Feather, both of which are optimized for tabular data.
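As a rough sketch of the gzip option, the standard library's `gzip.open` can wrap the file handle so that `json.dump` and `json.load` work unchanged. The file name `dataframes.json.gz` and the sample frame are placeholders chosen for this example.

```python
import gzip
import json
import os
import tempfile

import pandas as pd

df1 = pd.DataFrame({"A": [1, 2, 3], "B": [4, 5, 6]})
data = {"df1": df1.to_dict("records")}

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "dataframes.json.gz")

    # "wt" opens the gzip stream in text mode so json.dump can write strings
    with gzip.open(path, "wt", encoding="utf-8") as f:
        json.dump(data, f)

    # "rt" decompresses transparently while reading
    with gzip.open(path, "rt", encoding="utf-8") as f:
        restored = json.load(f)
```

Dropping `indent=4` when compressing is a reasonable choice, since the file is no longer meant to be read by eye and the extra whitespace only adds bytes.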

- Choose the right method based on dataset size and complexity.

- Always validate the JSON output to ensure data integrity.

- Consider using a version control system to track changes.
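One simple way to validate the output is a round-trip check: serialize, parse, rebuild the DataFrame, and compare it with the original. The function name `roundtrip_ok` is made up for this sketch, and the comparison deliberately ignores dtypes and the original index, which is an assumption you may want to tighten.

```python
import json

import pandas as pd
from pandas.testing import assert_frame_equal


def roundtrip_ok(df: pd.DataFrame) -> bool:
    """Return True if df survives a trip through JSON records unchanged."""
    text = json.dumps(df.to_dict("records"))
    restored = pd.DataFrame(json.loads(text))
    try:
        # The records layout does not keep the index, so compare without it
        assert_frame_equal(df.reset_index(drop=True), restored, check_dtype=False)
    except AssertionError:
        return False
    return True


df1 = pd.DataFrame({"A": [1, 2, 3], "B": [4, 5, 6]})
empty = pd.DataFrame({"A": [], "B": []})

ok_regular = roundtrip_ok(df1)
ok_empty = roundtrip_ok(empty)
```

The empty frame is included because it is an easy case to miss: with no rows, the records layout is just an empty list, so the column names are lost and the check fails.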

For more advanced JSON handling, explore the ujson library, which often provides significant performance improvements. For broader data analysis and manipulation, it is worth learning more about the Pandas library itself. Finally, for extremely large datasets, cloud storage options like AWS S3 or Google Cloud Storage might be necessary.

Conclusion

Saving a list of DataFrames to JSON efficiently involves careful planning and consideration of data size and structure. The dictionary-based approach offers a simple and effective solution for many use cases. However, for larger datasets, alternative strategies like multiple JSON files with an index might be more suitable. By understanding these approaches and best practices, you can manage and preserve your DataFrame data effectively, ensuring seamless data access and analysis in your projects. Start experimenting with different methods today!

#1 Convert Python List of Dataframes to JSON Format - Python Tutorial

#2 How To Convert A DataFrame To JSON Array In Python | DataFrame To JSON

#3 How To Convert A DataFrame To JSON Array In Python | DataFrame To JSON

#4 How To Convert A DataFrame To JSON In Python? [4 Ways] - Python Guides

![Saving Multiple Pandas DataFrames to JSON Files in Python A Comprehensive Guide - How To Convert A DataFrame To JSON In Python? [4 Ways] - Python Guides](https://pythonguides.com/wp-content/uploads/2024/02/dataframe-to-json-in-Python-pandas.jpg)

#5 If the preview looks OK, click the Save button to save it as a data frame

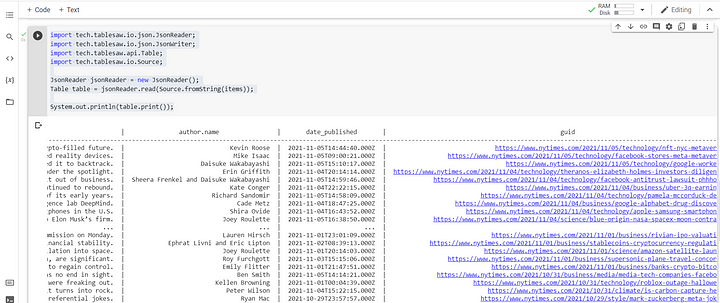

#6 Dataframes & JSON with Java on Jupyter | by Gary Sharpe | Medium

#7 Convert Json List To Pandas Dataframe - Printable Online

#8 Working with JSON data in very simple way learn data science